Recently, I asked how much evidence was contained in a single coin toss:

After seeing the outcome of this single coin toss [which came up heads], how much more should you believe my claim that the coin always comes up heads, compared to what you believed before the coin toss?

Many people submitted answers here on the blog and also on Hacker News, where the question led to an interesting discussion. Before I get to my answer, however, let’s talk about the question.

I like this question because it’s simple yet offers ample opportunity to explore something valuable but often unappreciated: weak evidence. Here we have the evidence of a single coin toss that comes up heads. That’s not much to go on. But it is something, and we would be wrong to ignore it.

Nevertheless, I’ve witnessed many experts ignore weak evidence, doctors in particular. The problem with ignoring weak evidence is that it’s abundant. Think of it as “long-tail” evidence: there’s so much of it that even if each piece is worth only a tiny bit, as a whole it’s worth a ton. So, if you don’t know how to mine it, you’re leaving a ton of potential knowledge buried within that long tail.

Interpreting evidence (weak or otherwise)

So, let’s talk about the evidence of our coin toss. My question was how much your prior beliefs about my claim (that the coin always comes up heads) should be swayed by the outcome of that single coin toss. I’m not asking about the coin, but about your state of knowledge about the coin and, more specifically, how that state should change in light of the coin toss.

There are many ways to approach the question, but to start, let’s define some notation. We’ll let P(X) denote our degree of belief in the proposition X, some statement that can be either true or false. Let P(X) = 0 represent our absolute conviction that X is false, and P(X) = 1 our absolute conviction that X is true. Values in between represent degrees of belief in between. For example, P(X) = 1/2, right in the middle, represents that we have no reason to believe that X is more likely to be true than false. If we know nothing about X, then, our default value for P(X) must be 1/2.

Let’s be clear that X is either true or false, regardless of what we think. Our X represents some real property of the universe, and the universe doesn’t alter itself just because our thoughts about it change or because we do a mathematical calculation that we think describes it in some way. That’s why we write P(X): the P notation represents that we’re not talking about X itself but rather our belief in X. The P(·) can be read as “the probability of” (or “the plausibility of”), so P(X) represents “the probability of X.”

Instead of some placeholder X, let’s define some real propositions that relate to our coin toss:

- S: the coin is a special coin that always comes up heads when tossed

- H: we observe the coin to come up heads in a coin toss

- T: we observe the coin to come up tails in a coin toss

- K: our prior knowledge about the coin, the universe, and everything

That last one, K, is important. It’s a massive proposition, the logical conjunction of many smaller propositions that represent everything we already know – that the Earth is approximately spherical, that gravity pulls things toward one another, that the author of this blog post is exceedingly handsome, and so on. This massive proposition is often left out of probability calculations with the understanding that it’s implied, but I’m going to include it because it makes our assumptions more explicit.

Now, the probabilities we’re interested in:

- P(S|K): our belief that the coin is a special heads-always coin, in light of our prior knowledge

- P(S|H∧K): our belief that the coin is a special heads-always coin, in light of our prior knowledge and the knowledge that we observed the coin to come up heads in a coin toss

I’ve introduced some new notation. The vertical bar (|) is read as “given” and can be interpreted to mean “in light of the following.” The ∧ operator is new, too. It represents logical conjunction and can be read as “and.” For instance, A∧B represents the proposition that both propositions A and B are true; and P(S|H∧K) represents the probability that S is true, in light of both H and K being true.

The first probability, P(S|K), is sometimes called our prior probability because it represents how much we believe S before considering new evidence, when we have only our prior knowledge K to go on. The second, P(S|H∧K), is sometimes called our posterior probability because it represents how much we believe S after considering the new evidence H, too.

Now, how do we update our prior beliefs about the coin to arrive at our posterior beliefs, in light of having witnessed the coin toss come up heads? Let’s think about this updating process for a moment.

Our new beliefs about the plausibility of some proposition X, in light of new evidence E, ought to be the same as our prior beliefs about X, but adjusted to account for observing the new evidence. The adjustment factor, according to Bayes’ rule (and justified by Cox’s theorem), is given by a quotient: the plausibility of observing the new evidence, given that X is true, divided by the plausibility of observing the new evidence in any case. (And, of course, all of these adjustments occur in light of our prior knowledge K about the universe in general.)

As a pseudo-English equation, Bayes’ rule is surprisingly intuitive:

(new plausibility) = (old plausibility) × (evidence adjustment),

or, equivalently, using our probability notation:

P(X|E∧K) = P(X|K) × [ P(E|X∧K) / P(E|K) ].

The evidence adjustment itself may not seem so intuitive, but it does make sense. It is the quotient of two plausibilities: that of observing the evidence E given that the proposition X is true, and that of observing E regardless. You can think of the adjustment as quantifying how well the proposition uniquely explains the evidence.

For example, if the proposition being true is the only reasonable explanation for the evidence, observing the evidence ought to provide strong support for the proposition. If rain is the only way that every house in the neighborhood gets wet at the same time, knowing that every house in the neighborhood is currently getting wet provides strong support to the proposition that it is raining. On the other hand, knowing that somebody is carrying an umbrella provides weaker support because things besides rain can also explain that evidence: the anticipation of rain, for one.
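
To make the rain and umbrella comparison concrete, here is a minimal sketch in Python. All of the numbers are made up for illustration (a prior of 1/5 for rain, and the chances of seeing each kind of evidence with and without rain), and the function name `posterior` is my own. It computes P(E|K) by splitting on whether X is true or not, which is the same sum-rule and product-rule expansion applied to the coin toss.

```python
from fractions import Fraction

def posterior(prior, p_e_given_x, p_e_given_not_x):
    """Return P(X|E∧K) = P(X|K) × P(E|X∧K) / P(E|K)."""
    # P(E|K), split over the two exclusive cases X and ¬X
    p_e = p_e_given_x * prior + p_e_given_not_x * (1 - prior)
    return prior * p_e_given_x / p_e

p_rain = Fraction(1, 5)  # assumed prior belief in rain (illustrative only)

# Every house wet at once: assumed certain with rain, impossible without it
wet_houses = posterior(p_rain, Fraction(1), Fraction(0))

# Someone carrying an umbrella: assumed likely with rain, but possible without
umbrella = posterior(p_rain, Fraction(9, 10), Fraction(1, 5))

print(wet_houses, umbrella)  # 1 9/17
```

With these assumed numbers, the evidence that only rain can explain lifts the belief from 1/5 all the way to 1, while the umbrella lifts it only to 9/17.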

Getting back to my original question, I asked how much more you should believe my claim S (that the coin always comes up heads) after observing the evidence H (that the coin did come up heads when you tossed it). That is, I’m asking you to characterize the new plausibility in light of the old. The relative change between the two is given as follows:

[ (new plausibility) / (old plausibility) ] – 1

This quantity, we can see from Bayes’ rule, is merely our evidence adjustment less one. But to calculate this value, we’ll first need the probabilities the calculation is likely to require. Let’s see, what do we already know?

Representing our knowledge

First, our prior knowledge K informs us that a coin toss is understood to have only two potential outcomes: heads and tails. A coin toss is considered invalid, for example, if the coin stands on edge or is tossed into a chasm. Therefore, a coin toss must result in heads or tails:

P(H∨T|K) = 1,

and getting tails is the same as not getting heads:

P(T|K) = P(¬H|K).

More notation: we use ¬ to denote “not” and ∨ to denote logical disjunction, read “or.”

Next, we know that if the coin is special, it will come up heads when tossed:

P(H|S∧K) = 1.

But what if the coin is not special? In that case, do we have any reason to believe it is more likely to come up heads than tails, or vice versa? No. So, we must consider each proposition equally likely:

P(H|¬S∧K) = P(¬H|¬S∧K).

Further, because there are no other possibilities – the coin must come up heads or tails – their total probability must be one:

P(H|¬S∧K) + P(¬H|¬S∧K) = 1.

If the two probabilities are equal and must sum to one, each must be one half:

P(H|¬S∧K) = P(¬H|¬S∧K) = 1/2.

At this point, you may be tempted to object that our beliefs, being overly subjective, have led us to an unjustified conclusion. Even if the coin isn’t special, how can we say it has an even chance of coming up heads (or tails), in other words, that it’s fair? What justifies this claim?

In truth, we can’t justify it. But we didn’t make it, either.

Remember, we are not making any claims about the coin. Our equations make claims only about our knowledge of the coin. If the coin isn’t special, maybe it is still biased somehow. Even so, we have no reason to believe it is more likely to be biased one way or the other. Therefore, by symmetry, we can assign only one degree of belief to either proposition H or ¬H, and that is 1/2.

The evidence-adjustment factor

With our prior beliefs represented as probability equations, let’s get back to computing that evidence adjustment.

(evidence adjustment) = P(H|S∧K) / P(H|K)

The numerator on the right-hand side we already know: P(H|S∧K) = 1.

The denominator, P(H|K), we do not. We must find some way to break it into terms that we do know.

The nice thing about propositions, like H, is that we can use Boolean logic to manipulate them. So, let’s break H into pieces that are more likely to be useful:

H = H∧(S ∨ ¬S) = (H∧S) ∨ (H∧¬S).

What I did was split the proposition that the coin comes up heads into a disjunction of two mutually exclusive propositions: that the coin comes up heads and is special, or that the coin comes up heads and is not special. That first term of the disjunction, however, is redundant: if a coin is special, our prior knowledge already tells us that it must come up heads; therefore, we can simplify H∧S to S. Now we have,

H = S ∨ (H∧¬S), given K,

and, therefore,

P(H|K) = P((S ∨ (H∧¬S))|K).

We can break up the disjunction on the right-hand side using the sum rule for probabilities, which is given as:

P(A∨B) = P(A) + P(B) – P(A∧B).

Since our disjunction is of mutually exclusive propositions, the final term of the sum-rule expansion drops out; therefore,

P(H|K) = P(S|K) + P(H∧¬S|K).

Now let’s crack that new final term, P(H∧¬S|K). To do so, we’ll use the product rule for probabilities:

P(A∧B) = P(A|B) P(B) = P(B|A) P(A).

So:

P(H∧¬S|K) = P(H|¬S∧K) P(¬S|K).

And, already knowing that P(H|¬S∧K) = 1/2, we can simplify the right-hand side:

P(H∧¬S|K) = P(¬S|K)/2.

And substituting this reduction back into the equation for P(H|K) gives,

P(H|K) = P(S|K) + P(¬S|K)/2.

We can further simplify the equation by noting that P(S|K) + P(¬S|K) must equal 1 and, therefore, that the ¬S term can be rewritten in terms of S to give,

P(H|K) = P(S|K) + (1 – P(S|K))/2 = (1 + P(S|K)) / 2.
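
As a sanity check on this simplification, the following sketch compares the unsimplified sum P(H|S∧K) P(S|K) + P(H|¬S∧K) P(¬S|K) with the closed form (1 + P(S|K)) / 2 for a few priors. The function name `p_heads` and the sample priors are my own choices; exact fractions are used so the comparison is not blurred by rounding.

```python
from fractions import Fraction

def p_heads(p_special):
    """P(H|K), expanded over the cases S and ¬S."""
    p_h_given_s = Fraction(1)          # P(H|S∧K) = 1
    p_h_given_not_s = Fraction(1, 2)   # P(H|¬S∧K) = 1/2
    return p_h_given_s * p_special + p_h_given_not_s * (1 - p_special)

# Arbitrary sample priors, chosen only for illustration
for p in (Fraction(0), Fraction(1, 4), Fraction(1, 2), Fraction(1)):
    assert p_heads(p) == (1 + p) / 2   # matches the simplified form
    print(f"P(S|K) = {p}: P(H|K) = {p_heads(p)}")
```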

Now, to bring it all home, let’s plug these values into our evidence-adjustment formula:

(evidence adjustment)

= P(H|S∧K) / P(H|K)

= 1 / P(H|K)

= 1 / [(1 + P(S|K)) / 2]

= 2 / (1 + P(S|K)).

And that’s our evidence-adjustment factor. Now, what does it do?
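
For a first feel, here is a small sketch that evaluates the factor 2 / (1 + P(S|K)) for a handful of priors and applies it to each one. The function name `evidence_adjustment` and the sample priors are mine, picked only for illustration.

```python
from fractions import Fraction

def evidence_adjustment(p_special):
    """Adjustment factor P(H|S∧K) / P(H|K) = 2 / (1 + P(S|K))."""
    return 2 / (1 + p_special)

# Arbitrary sample priors, from certain disbelief to certain belief
for p in (Fraction(0), Fraction(1, 10), Fraction(1, 2), Fraction(9, 10), Fraction(1)):
    factor = evidence_adjustment(p)
    # new plausibility = old plausibility × evidence adjustment
    print(f"prior {p}: factor {factor}, posterior {p * factor}")
```

The factor never drops below 1 and never exceeds 2, so a single head can at most double our belief.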

Adjusting our beliefs in light of the new evidence

To better understand what the evidence adjustment does, let’s recall the original belief-adjustment equation:

(new plausibility) = (old plausibility) × (evidence adjustment)

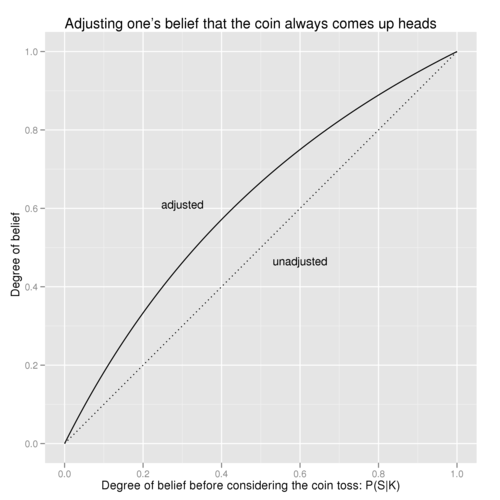

So the adjustment factor nudges our initial degree of belief, whatever it may be, one way or the other, depending on the evidence. To see the effect of this nudge for various initial degrees of belief, consider the following plot:

Let’s see if the nudge agrees with our intuition. First, if we were absolutely convinced that the coin is (or is not) special, no amount of evidence should sway our beliefs. And indeed, the plot shows that when our prior probability is 0 or 1, so is our adjusted (posterior) probability, exactly as we expected.

But what if our initial knowledge is complete ignorance about the coin being special? In that case, upon seeing the coin toss, our prior probability of 1/2 gets nudged to the posterior probability of 2/3 – toward the belief that the coin is indeed special. Again, it’s what we would expect.
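
One rough way to check the jump from 1/2 to 2/3 is by simulation. The sketch below draws many hypothetical coins, keeps only the tosses that come up heads, and reports what fraction of those came from special coins. Note the extra assumption: it models every non-special coin as fair, which is stronger than the symmetry argument above (that argument is about our knowledge, not about the coin). The function name, trial count, and seed are arbitrary choices of mine.

```python
import random

def simulate_posterior(prior, trials=200_000, seed=0):
    """Estimate P(S|H∧K) as the share of special coins among observed heads."""
    rng = random.Random(seed)
    heads = 0
    heads_and_special = 0
    for _ in range(trials):
        special = rng.random() < prior
        # Special coins always land heads; others are ASSUMED fair here
        came_up_heads = special or rng.random() < 0.5
        if came_up_heads:
            heads += 1
            heads_and_special += special
    return heads_and_special / heads

prior = 0.5
estimate = simulate_posterior(prior)
exact = prior * 2 / (1 + prior)  # old plausibility × evidence adjustment
print(f"simulated: {estimate:.3f}, exact: {exact:.3f}")
```

The simulated share should land close to 2/3, with the usual sampling noise.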

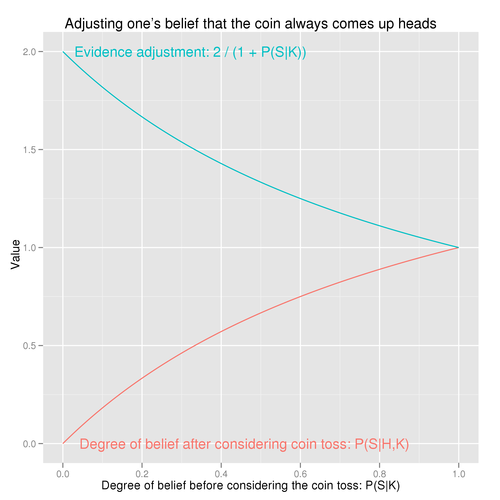

In fact, the evidence adjustment is always going to push us toward confirming the belief that the coin is special because the evidence supports that belief. The force of that push, however, depends on how surprising we find the evidence, that is, how much it challenges our prior beliefs. The following plot shows this relationship:

Note that the evidence provides the strongest push – a factor of 2 – when our prior knowledge makes us doubt most strongly that the coin is special. On the other extreme, when we are already convinced that the coin is special, observing that the coin comes up heads when tossed isn’t surprising at all, and correspondingly the evidential push of that observation is nothing: an adjustment factor of unity.

Answering the original question

Finally, with our evidence adjustment well in hand, we can answer the original question: After seeing the outcome of this single coin toss, how much more should you believe my claim that the coin always comes up heads, compared to what you believed before the coin toss?

The answer, we reasoned earlier, is the evidence adjustment less one:

(relative plausibility increase)

= [evidence adjustment] – 1

= [2 / (1 + P(S|K))] – 1

= [2 / (1 + (prior plausibility))] – 1

= (1 – (prior plausibility)) / (1 + (prior plausibility)).

So, if we let p represent our prior degree of belief that the coin is a special, heads-always coin, we should be

100% × (1 – p) / (1 + p)

more confident in our belief after seeing the coin come up heads when tossed.
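
To put numbers on this, here is a short sketch that evaluates (1 – p) / (1 + p) for a skeptic, for someone with no opinion, and for a near-believer. The function name `relative_increase` and the three priors are my own illustrative choices. The sketch rejects p = 0, since a relative change from a prior of exactly zero is a division by zero rather than a 100% gain.

```python
from fractions import Fraction

def relative_increase(p):
    """Relative gain in belief in S after one observed head: (1 - p) / (1 + p)."""
    if not 0 < p <= 1:
        raise ValueError("prior must be in (0, 1]; the ratio is undefined at 0")
    return (1 - p) / (1 + p)

# Illustrative priors: strong skeptic, no opinion, near-believer
for p in (Fraction(1, 1000), Fraction(1, 2), Fraction(9, 10)):
    gain = relative_increase(p)
    print(f"prior {float(p):.3f} -> {float(gain):.1%} more confident")
```

The skeptic’s belief nearly doubles (in relative terms) while the near-believer’s barely moves, matching the shape of the evidence adjustment.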

And that’s the answer.

But there are other ways of arriving at it. One of the more convenient is to use odds instead of probabilities. But let’s save that discussion for next time.

Update: Here’s the promised discussion: Odds and the evidence of a single coin toss.